12. Neural Network – Deep Learning – Machine Learning – Artificial intelligence (AI)

An artificial neural network (NN) is a computing system inspired by biological neural networks found in animal brains. Neural networks can be used to develop artificial intelligence (AI).

The system “learns” to perform tasks after being trained with a large set of example data. For example, an NN can learn image recognition after being trained with example images each labeled with the appropriate designation.

If, for example, you want the NN to identify cats in images, you would train the network with pictures of cats and of other animals (not cats), each labeled appropriately as “cat” or “no cat”.

The NN then automatically derives identifying characteristics from the examples it processes. After successful training, the network should be able to correctly classify new animal pictures as “cat” or “no cat”.

An NN is a collection of connected units or nodes called artificial neurons, analogous to the neurons in a biological brain. Each artificial neuron, like the cells in a biological nervous system, can transmit a signal to other neurons.

An artificial neuron that receives a signal processes it and sends a new signal to the neurons connected to it.

In NNs the “signal” at a neuron is a real number, and the output of each neuron is computed by some non-linear function of the sum of its inputs.

Neurons and the connections between them typically have a weight that adjusts (up or down) as learning proceeds. The weight determines the strength of the signal passed along a connection.

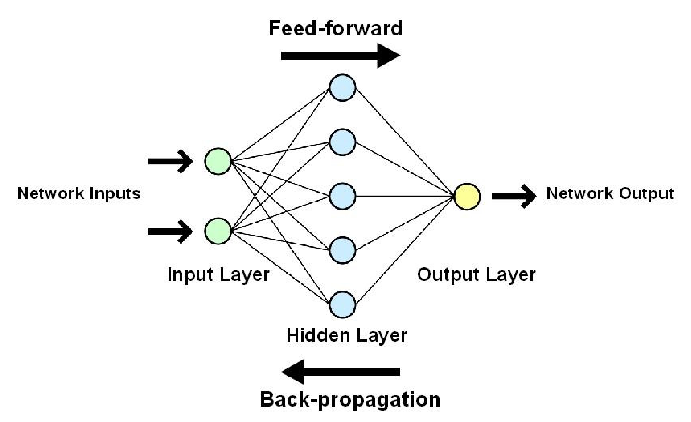

Typically, neurons are placed in layers. Different layers may perform different transformations on their inputs. Signals travel from the first layer (the input layer), to the last layer (the output layer) after traversing at least one hidden layer of neurons.

NNs have been used on a variety of tasks, including computer vision, speech recognition, machine translation, playing board and video games, and medical diagnosis.

Components

Input neurons

The initial inputs are numbers derived from external data, such as images and documents. The ultimate outputs accomplish the task, such as recognizing an object in an image. The important characteristic of the activation function (the non-linear function that turns a neuron’s summed input into its output) is that it provides a smooth transition as input values change, i.e. a small change in input produces a small change in output.
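The smoothness property can be illustrated with the logistic sigmoid, one commonly used activation function. The text above does not name a specific function, so the choice of sigmoid here is an assumption made for illustration.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid: maps any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


# Two inputs that differ only slightly...
y1 = sigmoid(0.50)
y2 = sigmoid(0.51)

# ...produce outputs that also differ only slightly.
print(y1, y2, abs(y2 - y1))
```

The slope of the sigmoid never exceeds 0.25, so the change in output is always at most a quarter of the change in input.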

Connections and weights

The network consists of connections, each connection providing the output of one neuron as an input to another neuron. Each connection is assigned a weight that represents its relative importance. A given neuron can have multiple input and output connections.

Propagation function

The propagation function computes the input to a neuron from the outputs of its predecessor neurons and their connections as a weighted sum. A bias term can be added to the result of the propagation.
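A minimal sketch of the propagation function described above. The name `propagate` and the sample numbers are made up for illustration.

```python
from typing import Sequence


def propagate(inputs: Sequence[float], weights: Sequence[float], bias: float = 0.0) -> float:
    """Weighted sum of the predecessor outputs, plus an optional bias term."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one connection weight")
    return sum(x * w for x, w in zip(inputs, weights)) + bias


# Three predecessor neurons feeding one neuron.
net_input = propagate([1.0, 2.0, 3.0], [0.5, -1.0, 0.25], bias=0.1)
print(net_input)  # approximately -0.65
```

The result is the value that the neuron's activation function would then turn into its output.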

Organization

The neurons are typically organized into multiple layers. Neurons of one layer connect only to neurons of the immediately preceding and immediately following layers.

The layer that receives external data is the input layer.

The layer that produces the ultimate result is the output layer.

In between, there are one or more hidden layers. They can be fully connected, with every neuron in one layer connecting to every neuron in the next layer.
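The layered, fully connected organization can be sketched as a forward pass in plain Python. The layer sizes (3 inputs, 4 hidden neurons, 2 outputs), the random initial weights, and the sigmoid activation are all assumptions chosen for illustration.

```python
import math
import random
from typing import List, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights, biases)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def make_layer(n_inputs: int, n_neurons: int, rng: random.Random) -> Layer:
    """weights[j][i] is the weight of the connection from input i to neuron j."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)] for _ in range(n_neurons)]
    biases = [0.0] * n_neurons
    return weights, biases


def forward(layers: List[Layer], inputs: List[float]) -> List[float]:
    """Pass a signal from the input layer through every following layer."""
    activations = inputs
    for weights, biases in layers:
        # Fully connected: every neuron sees every activation of the previous layer.
        activations = [
            sigmoid(sum(w * a for w, a in zip(row, activations)) + b)
            for row, b in zip(weights, biases)
        ]
    return activations


rng = random.Random(0)
layer_sizes = [3, 4, 2]  # input layer, one hidden layer, output layer
layers = [make_layer(n_in, n_out, rng) for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]
outputs = forward(layers, [0.2, 0.7, 0.1])
print(outputs)
```

The input layer holds no weights of its own here. It simply supplies the values that the first set of connections consumes.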

Hyperparameters

A hyperparameter is a parameter whose value is set before the learning process begins. Examples of hyperparameters include learning rate, the number of hidden layers and batch size. The values of some hyperparameters can be dependent on those of other hyperparameters. For example, the size of some layers can depend on the overall number of layers.
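As a sketch, hyperparameters can be collected in one immutable object that is fixed before training starts. The class name, the default values, and the rule that ties hidden-layer width to the number of hidden layers are all hypothetical. They only illustrate how one hyperparameter can depend on another.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Hyperparameters:
    learning_rate: float = 0.01
    hidden_layers: int = 2
    batch_size: int = 32
    input_size: int = 8
    output_size: int = 1

    def layer_sizes(self) -> List[int]:
        """Hypothetical rule: split a budget of 64 hidden neurons evenly across the hidden layers."""
        width = max(1, 64 // self.hidden_layers)
        return [self.input_size] + [width] * self.hidden_layers + [self.output_size]


print(Hyperparameters().layer_sizes())                 # [8, 32, 32, 1]
print(Hyperparameters(hidden_layers=4).layer_sizes())  # [8, 16, 16, 16, 16, 1]
```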

Learning

Learning is the adaptation of the network to better handle a task by considering sample observations. Learning involves adjusting the weights of the network to improve the accuracy of the result.

This is done by minimizing the observed errors. Learning is complete when examining additional observations does not usefully reduce the error rate.

Even after learning, the error rate typically does not reach zero. If, after learning, the error rate is too high, the network typically must be redesigned.

Practically this is done by defining an error function that is evaluated periodically during learning. As long as its output continues to decline, learning continues.
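The stopping rule can be sketched with a deliberately tiny model: a single weight `w` that should learn `y = 2 * x`. The model, the data, the tolerance, and the function names are assumptions for illustration. A real network has many weights but follows the same loop.

```python
from typing import List, Tuple

Data = List[Tuple[float, float]]


def error_function(w: float, data: Data) -> float:
    """Average squared difference between the model output w * x and the target y."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def train(data: Data, learning_rate: float = 0.05,
          tolerance: float = 1e-9, max_epochs: int = 10_000) -> Tuple[float, float, int]:
    w = 0.0
    error = error_function(w, data)
    epochs = 0
    for epochs in range(1, max_epochs + 1):
        gradient = sum(2.0 * (w * x - y) * x for x, y in data) / len(data)
        w -= learning_rate * gradient
        new_error = error_function(w, data)
        improvement = error - new_error
        error = new_error
        # Stop once the error no longer declines by a useful amount.
        if improvement < tolerance:
            break
    return w, error, epochs


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
w, final_error, epochs = train(data)
print(w, final_error, epochs)
```

Training ends well before `max_epochs` because the error stops improving once `w` is close to 2.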

The outputs are actually numbers, so when the error is low, the difference between the output and the correct answer is small.

Learning attempts to reduce the total differences across the observations.

Learning rate

The learning rate defines the size of the corrective steps that the model takes to adjust for errors in each observation.

A high learning rate shortens the training time, but with lower ultimate accuracy, while a lower learning rate takes longer, but with the potential for greater accuracy.
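A toy illustration of the step-size trade-off, assuming the error function E(w) = w² with its minimum at w = 0. The three learning rates are picked by hand for this example and say nothing about good values for a real network.

```python
def descend(learning_rate: float, steps: int = 20, start: float = 1.0) -> float:
    """Run a fixed number of gradient descent steps on E(w) = w**2."""
    w = start
    for _ in range(steps):
        gradient = 2.0 * w  # derivative of w**2
        w -= learning_rate * gradient
    return w


results = {lr: descend(lr) for lr in (0.01, 0.4, 1.05)}
for lr, w in results.items():
    print(f"learning rate {lr}: w after 20 steps = {w:.6g}")
```

In this toy case the smallest rate is still far from the minimum after 20 steps, the middle rate is essentially there, and the largest rate overshoots on every step and moves away from the minimum.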

The concept of momentum allows the balance between the gradient and the previous change to be weighted such that the weight adjustment depends to some degree on the previous change.

A momentum close to zero emphasizes the gradient, while a value close to one emphasizes the last change.
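One common way to write the momentum update, sketched here as an assumption since the text gives no formula, is a weighted blend of the gradient step and the previous change:

```python
from typing import Tuple


def momentum_step(weight: float, gradient: float, previous_change: float,
                  learning_rate: float, momentum: float) -> Tuple[float, float]:
    """Blend the plain gradient step with the previous weight change.

    momentum = 0 uses only the gradient; momentum = 1 repeats the last change.
    """
    gradient_step = -learning_rate * gradient
    change = (1.0 - momentum) * gradient_step + momentum * previous_change
    return weight + change, change


# Minimize the toy error E(w) = (w - 3)**2, whose gradient is 2 * (w - 3).
w, change = 0.0, 0.0
for _ in range(200):
    w, change = momentum_step(w, 2.0 * (w - 3.0), change, learning_rate=0.1, momentum=0.5)
print(w)  # close to 3
```

The function returns the change alongside the new weight so that the caller can feed it back in on the next step.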

Error function

While it is possible to define an error function ad hoc, frequently the choice is determined by the function’s desirable properties (such as convexity) or because it arises from the model (e.g., in a probabilistic model the model’s posterior probability can be used as an inverse cost).

Backpropagation

Backpropagation is a method to adjust the connection weights to compensate for each error found during learning. The error amount is effectively divided among the connections.

Technically, backpropagation calculates the gradient (the derivative) of the error function associated with a given state with respect to the weights. The weight updates are typically done via a gradient descent method.
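A minimal sketch of backpropagation on the smallest possible network: one input, one hidden sigmoid neuron, and one linear output neuron. The network shape, the squared error, the starting weights, and the learning rate are assumptions for illustration. The gradients are obtained by applying the chain rule backwards from the error to each weight.

```python
import math
from typing import Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(w1: float, w2: float, x: float) -> Tuple[float, float]:
    h = sigmoid(w1 * x)  # hidden neuron output
    y = w2 * h           # linear output neuron
    return h, y


def error(w1: float, w2: float, x: float, target: float) -> float:
    _, y = forward(w1, w2, x)
    return (y - target) ** 2


def backprop(w1: float, w2: float, x: float, target: float) -> Tuple[float, float]:
    """Return (dE/dw1, dE/dw2) by propagating the error backwards."""
    h, y = forward(w1, w2, x)
    d_y = 2.0 * (y - target)         # dE/dy
    d_w2 = d_y * h                   # dE/dw2 = dE/dy * dy/dw2
    d_h = d_y * w2                   # error passed back to the hidden neuron
    d_w1 = d_h * h * (1.0 - h) * x   # sigmoid'(z) = h * (1 - h)
    return d_w1, d_w2


w1, w2, x, target = 0.5, -0.3, 1.5, 1.0
learning_rate = 0.1
initial_error = error(w1, w2, x, target)
for _ in range(500):
    g1, g2 = backprop(w1, w2, x, target)
    w1 -= learning_rate * g1  # gradient descent update
    w2 -= learning_rate * g2
final_error = error(w1, w2, x, target)
print(initial_error, final_error)
```

The line computing `d_h` is where the error is divided among connections: the hidden neuron receives a share of the output error in proportion to the weight `w2` of its outgoing connection.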

Supervised learning

Supervised learning uses a set of paired inputs and desired outputs. The learning task is to produce the desired output for each input. In this case, the error function is related to eliminating incorrect deductions.

A commonly used error function is the mean squared error: training tries to minimize the average squared difference between the network’s output and the desired output.
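The mean squared error is short enough to write out directly. The sample outputs and targets below are made up for illustration.

```python
from typing import Sequence


def mean_squared_error(outputs: Sequence[float], targets: Sequence[float]) -> float:
    """Average of the squared differences between network outputs and desired outputs."""
    if len(outputs) != len(targets) or len(outputs) == 0:
        raise ValueError("outputs and targets must be non-empty and of equal length")
    return sum((o - t) ** 2 for o, t in zip(outputs, targets)) / len(outputs)


# Network outputs for three examples versus their labels (1.0 = "cat", 0.0 = "no cat").
mse = mean_squared_error([0.9, 0.2, 0.8], [1.0, 0.0, 1.0])
print(mse)  # approximately 0.03
```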

Tasks suited for supervised learning are pattern recognition (classification) and regression (function approximation).

Supervised learning is also applicable to sequential data (e.g., for handwriting, speech and gesture recognition). This can be thought of as learning with a “teacher”, in the form of a function that provides continuous feedback on the quality of solutions obtained thus far.

Neural Network Program

I have made a neural network program, which you can use to make your own neural nets.